🧠 How Multi-Head Attention Works: From Biography to Contextual CV

This document explains the mechanics of Multi-Head Attention in Transformers, using the analogy of converting a general biography into a contextual CV tailored for a specific job application.

The Goal: From "Who I Am" to "Who I Am for this Job"

- Your Biography: The source of truth—our Input Embedding + Positional Encoding.

- The Job Application: Focus shifts based on context (e.g., Software Engineering Manager vs. Startup CTO).

- The Contextual CV: Tailored highlights relevant to the specific role, like Contextual Vectors.

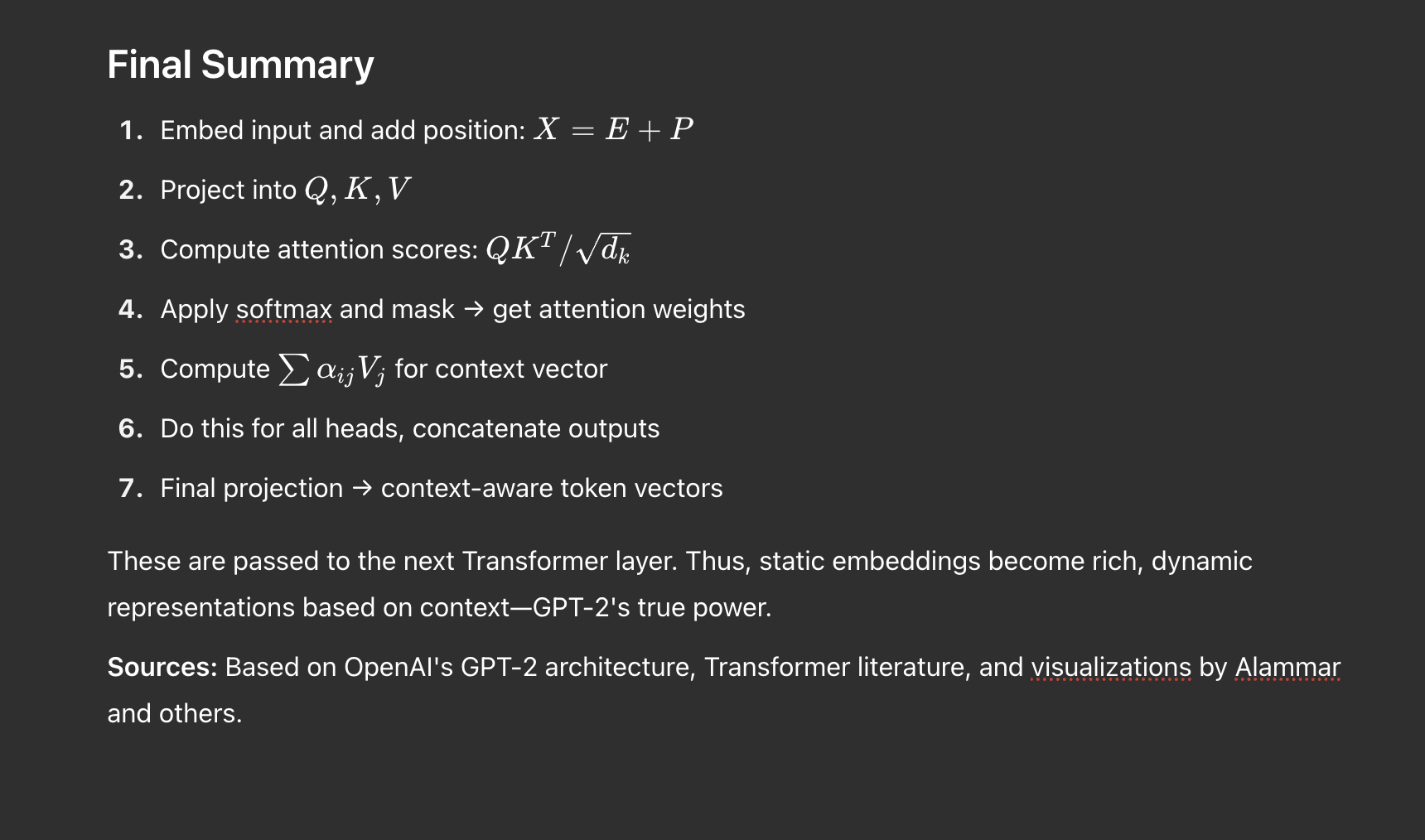

Step 1: The Input - Your Biography as a Matrix

- Tokenization: "I led a team that built a scalable API." → ["I", "led", "a", "team", "that", "built", "a", "scalable", "API", "."]

- Embedding: Each token becomes a 512-dimensional vector.

- Positional Encoding: Position vectors are added to embedding vectors.

Result: a (10, 512) Input Matrix (X).

| Token | Vector (Size 512) |

|---|---|

| "I" | [0.1, 0.8, ...] |

| "led" | [0.5, 0.2, ...] |

| "team" | [0.9, 0.1, ...] |

Step 2: The Attention Head - A "Perspective" on Your Biography

Each head has its own lens via Wq, Wk, Wv matrices. With d_model = 512 and h = 8:

- Q = X × Wq → (10, 64)

- K = X × Wk → (10, 64)

- V = X × Wv → (10, 64)

Q: the question. K: the description. V: the content.

Step 3: Calculating the Context - The Attention Score

- Scores = Q × KT → (10, 10)

- Scale: divide by √64 = 8

- Softmax applied row-wise → weights (probabilities)

Example: "led" might pay 70% attention to "team".

Step 4: Creating the Contextual Vector

Use the softmax weights to compute a weighted sum of V:

Z = Softmax_Scores × V → (10, 64)

"led" now means "leadership in the context of a team".

Step 5: Multi-Head Attention - Combining All Perspectives

Repeat steps 2–4 across 8 heads:

- Each head captures different perspectives (e.g., syntax, technical, leadership).

- Outputs concatenated → (10, 512)

- Final projection: Concat × Wo → (10, 512)

This results in the final Contextual CV – tailored, enriched embeddings per token.

A Deeper Dive: Two Heads, Two Perspectives

Let's make this more concrete. Imagine our input is "She is a great leader" and we have 2 attention heads. Each head has its own set of Wq, Wk, Wv matrices, allowing them to learn different types of relationships.

Head 1: The "Action/Role" Perspective

This head might learn to link pronouns to their roles. When processing the word "She", its Query (Q1) seeks out words defining her role. Through the dot product with all Key (K1) vectors, it finds the highest alignment with "leader".

Attention Weights for "She" (Head 1):

- is: 10%

- a: 5%

- great: 15%

- leader: 70%

The resulting context vector (Z1) for "She" is thus heavily influenced by the Value (V1) vector of "leader". The model understands "She" in the context of being a leader.

Head 2: The "Quality/Descriptor" Perspective

This head, with its different Wq, Wk, Wv matrices, might learn to focus on descriptive attributes. When processing "She", its Query (Q2) looks for adjectives or qualities. It discovers the highest alignment with the word "great".

Attention Weights for "She" (Head 2):

- is: 15%

- a: 5%

- great: 65%

- leader: 15%

The context vector (Z2) for "She" from this head is primarily a representation of the Value (V2) of "great". The model understands "She" in the context of being great.

Combining Perspectives

The model now has two different contextual understandings of "She":

- Z1: "She" as a "leader".

- Z2: "She" as "great".

These two vectors are concatenated: Concat(Z1, Z2). This combined vector is then passed through a final linear layer (Wo) to produce the final, enriched output vector for "She". This final vector simultaneously understands that "She" is both a "leader" and "great", capturing a much richer meaning than a single attention head could alone.

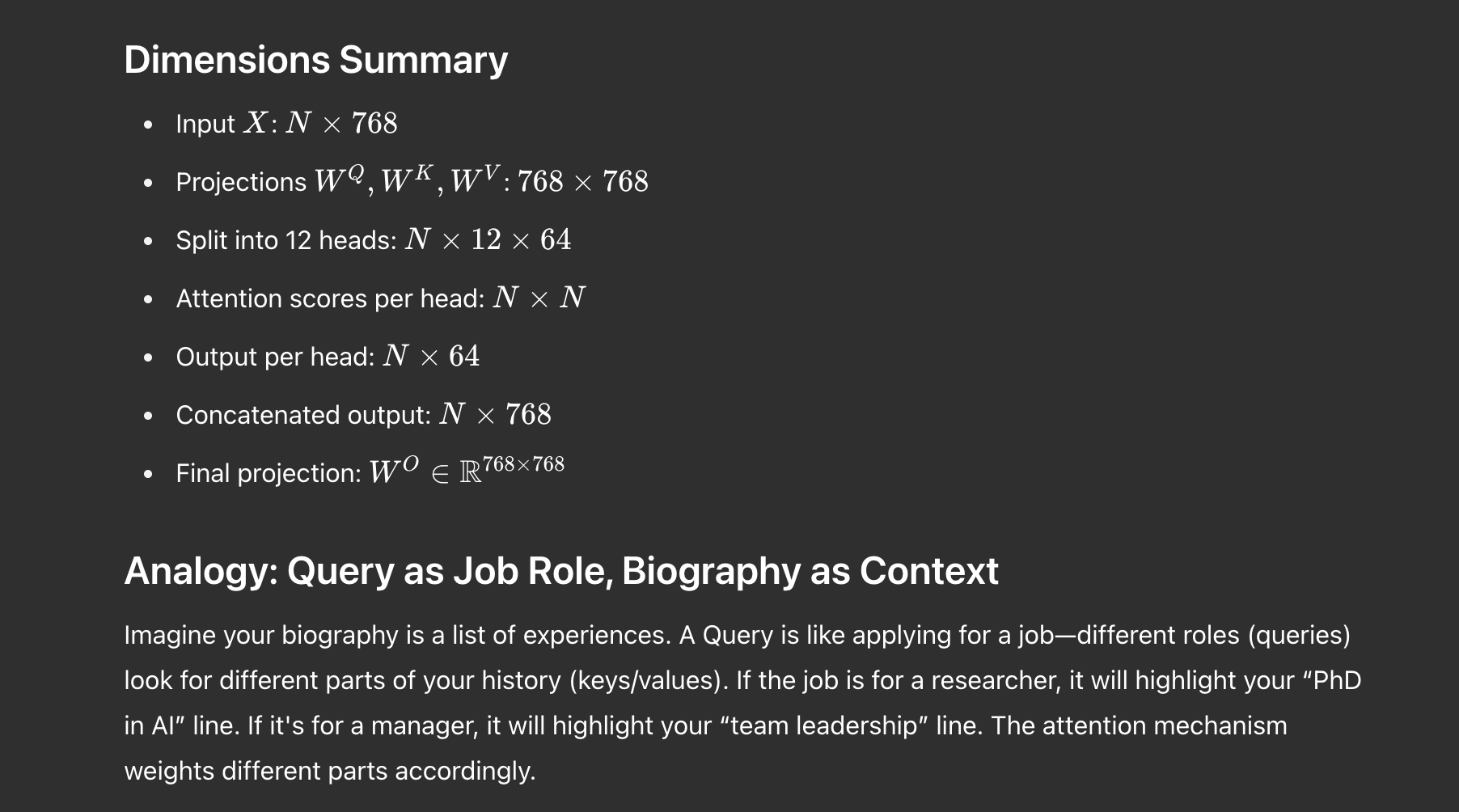

Why is the Concatenated Output 768-dimensional?

You might have seen models like BERT using a dimension of 768. This number isn't arbitrary; it's the result of combining the outputs of all attention heads. The core idea is to divide the model's total representational power into smaller, specialized subspaces (the heads) and then merge their findings.

Let's break it down with a standard example:

- Model Dimension (d_model): 768 (The total size of the embedding for each token).

- Number of Heads (h): 12 (A common choice).

- Splitting the Dimension: The model splits the 768-dimensional space across the 12 heads. The dimension for each head's Query, Key, and Value vectors (d_k, d_v) is calculated as:

d_v = d_model / h = 768 / 12 = 64 - Per-Head Processing: Each of the 12 heads independently produces a context vector (Z) of size 64 for each token.

- Concatenation: After all heads complete their work, their output vectors are concatenated side-by-side:

Concatenated Vector = [Z1, Z2, ..., Z12] - Final Size Calculation: The size of this concatenated vector is the sum of the sizes of all head outputs:

Total Size = h * d_v = 12 * 64 = 768

So, we arrive back at a 768-dimensional vector for each token. This vector, which has aggregated the "perspectives" from all 12 heads, is then passed through one final linear projection layer (Wo) to produce the final output of the multi-head attention block, which also has a dimension of 768, ready for the next layer in the Transformer.

How Does Concatenation Create Meaning? The "Team of Specialists" Analogy

This is an excellent question. Simply sticking vectors together doesn't automatically create a meaningful representation. The magic isn't in the concatenation itself, but in the final linear layer (Wo) that processes the combined vector.

Step 1: Each Head is a Specialist Providing a Report

Imagine you are a CEO making a critical decision and you consult a panel of 12 specialists: a financial analyst, a legal expert, a marketing guru, a technical lead, and so on. Each attention head is like one of these specialists. Because each head has its own unique Wq, Wk, and Wv matrices, it learns to focus on a specific type of relationship in the text.

- Head 1 (The Syntactician): Might focus on grammatical relationships. Its 64-dim output vector (Z1) for a word encodes its syntactic role (e.g., "this is the subject").

- Head 2 (The Descriptor Analyst): Might link nouns to their adjectives. Its output (Z2) encodes descriptive context (e.g., "this subject is described as 'great'").

- Head 3 (The Proximity Expert): Might track how far apart words are, learning about locality.

Each 64-dimensional Z vector is a concise "report" from one specialist.

Step 2: Concatenation is Laying All Reports on the Table

When you concatenate these vectors, you are not blending or averaging them. You are placing all 12 specialist reports side-by-side. The 768-dimensional vector is this "master table" of information.

Concat_Vector = [Report_from_Head1, Report_from_Head2, ..., Report_from_Head12]

The information from the "Syntactician" (Head 1) is preserved in dimensions 1-64. The information from the "Descriptor Analyst" (Head 2) is preserved in dimensions 65-128. The perspectives remain distinct and un-merged at this stage.

Step 3: The Final Linear Layer (Wo) is the CEO Making the Decision

This is the crucial step that creates the unified meaning. The concatenated 768-dim vector is passed through one final, learnable linear layer, represented by the weight matrix Wo.

This Wo matrix acts like the CEO. During training, it learns how to read the "master table" of concatenated reports and synthesize them into a single, coherent final output. It learns the complex interactions between the specialists' reports, figuring out the optimal way to mix, weigh, and combine the different perspectives. For instance, it might learn patterns like:

- "When the syntax head identifies a subject and the descriptor head identifies a positive quality for it, the final output should strongly emphasize the concept of 'capability'."

- "If the technical head flags a word as a 'database', pay less attention to the report from the 'poetry' head."

In summary, the concatenation is meaningful because it preserves the distinct perspectives from each specialized head. The subsequent linear layer (Wo) then intelligently synthesizes these diverse perspectives into a unified, high-dimensional representation that captures a rich and multi-faceted understanding of each token.